Featured Projects

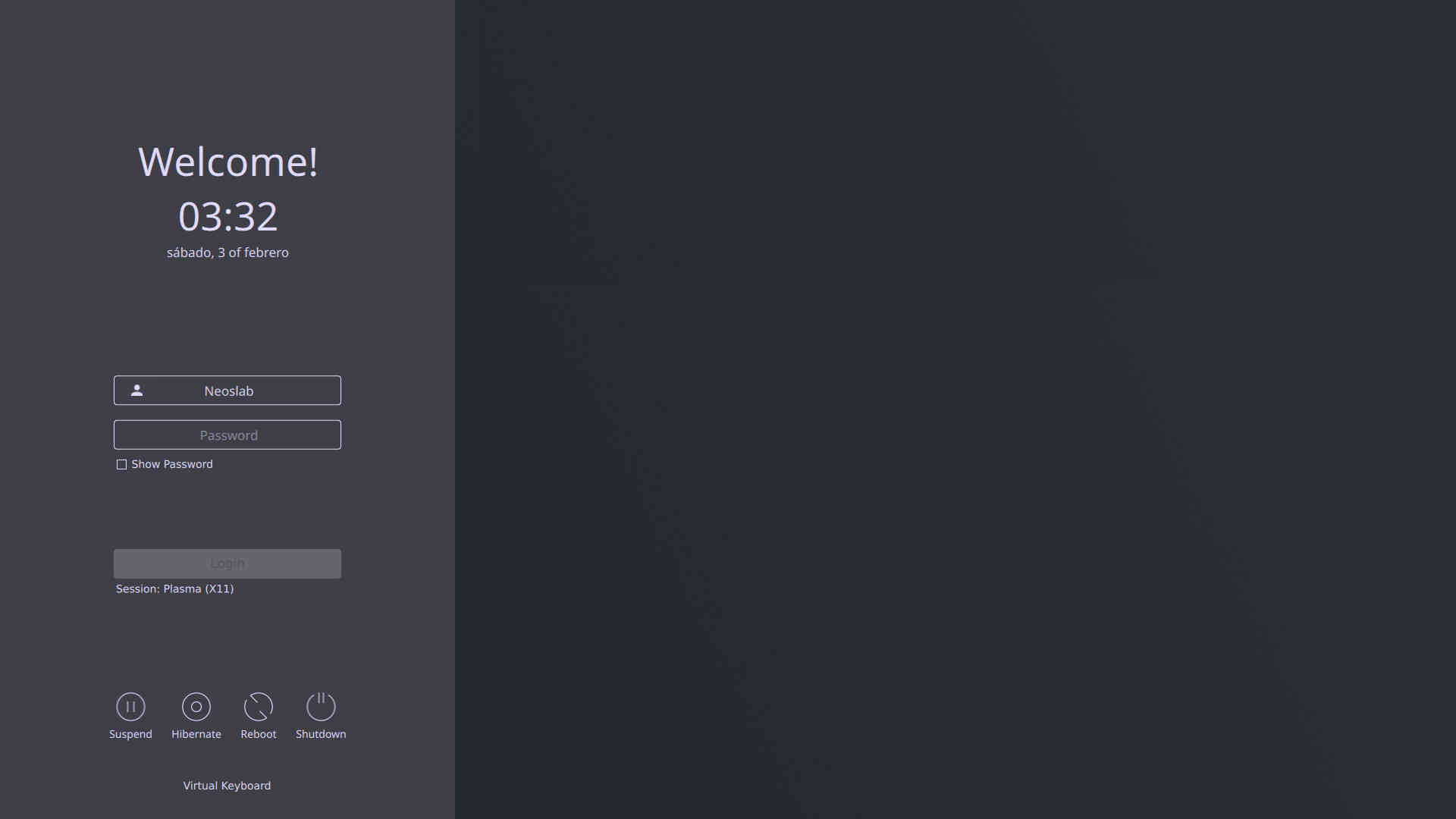

SnoopGod is more than an operating system: it is a free, open source community project that aims to promote a culture of security in IT environments and to help make them better and safer.

Tools for Every Situation

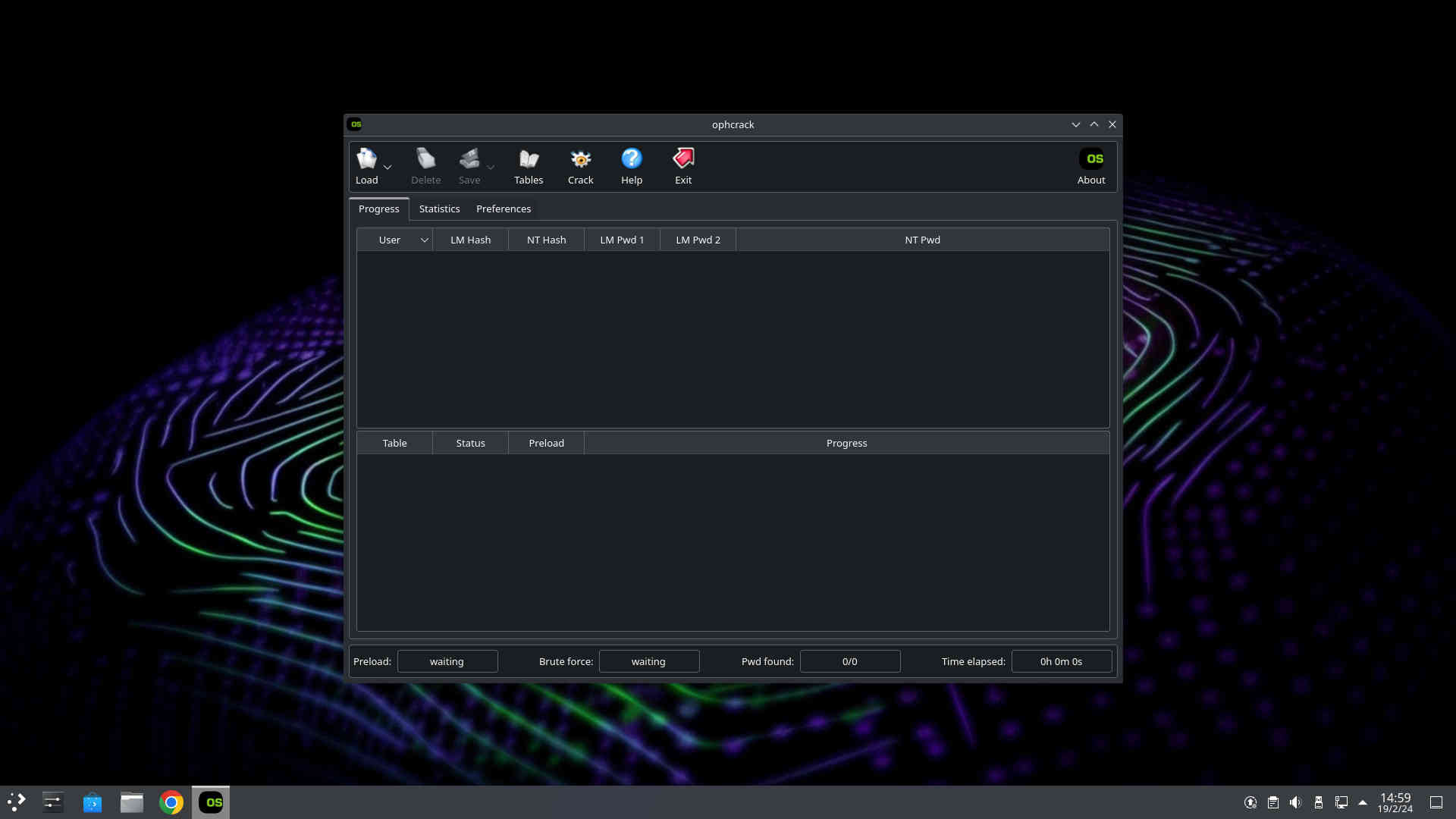

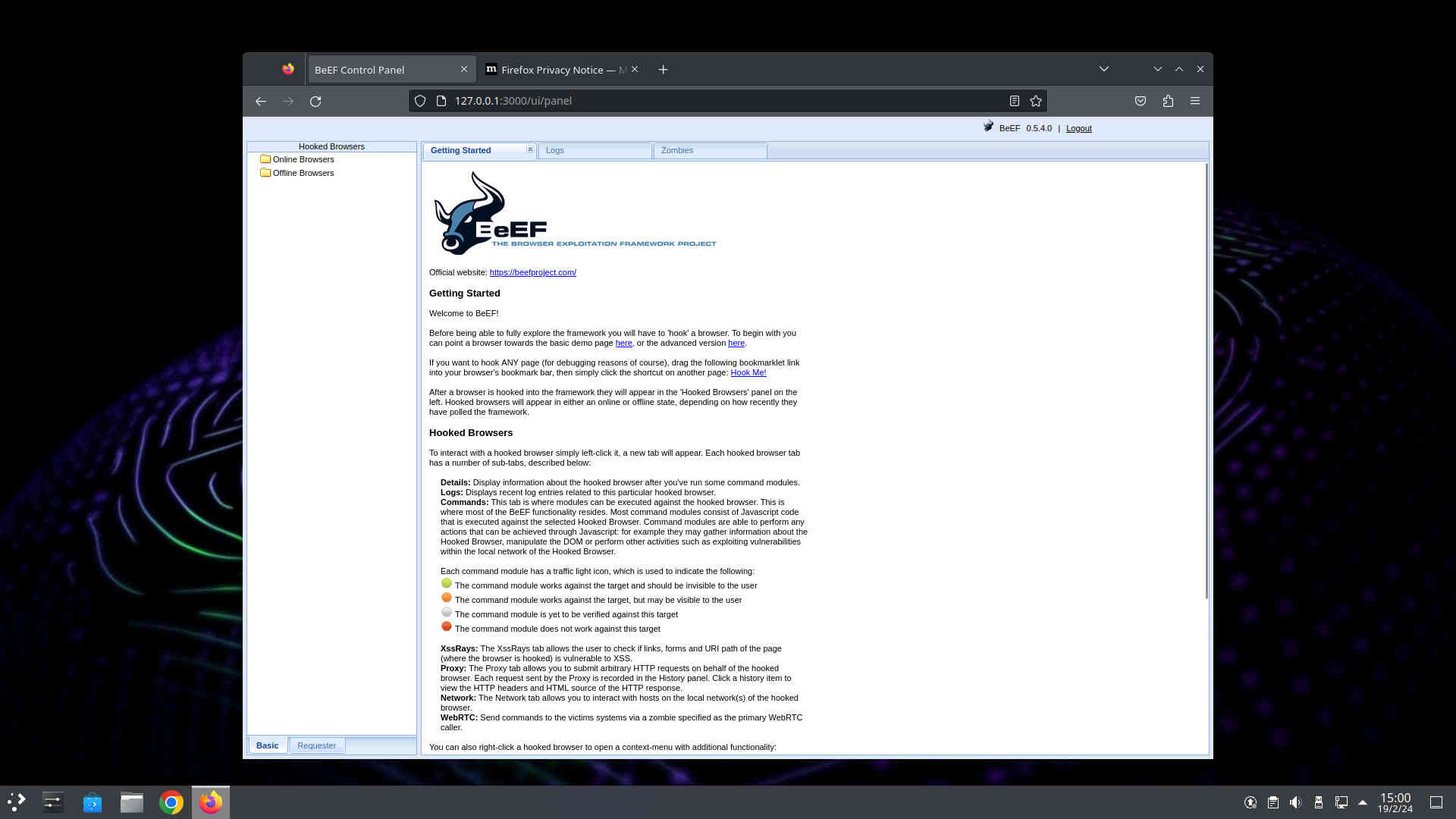

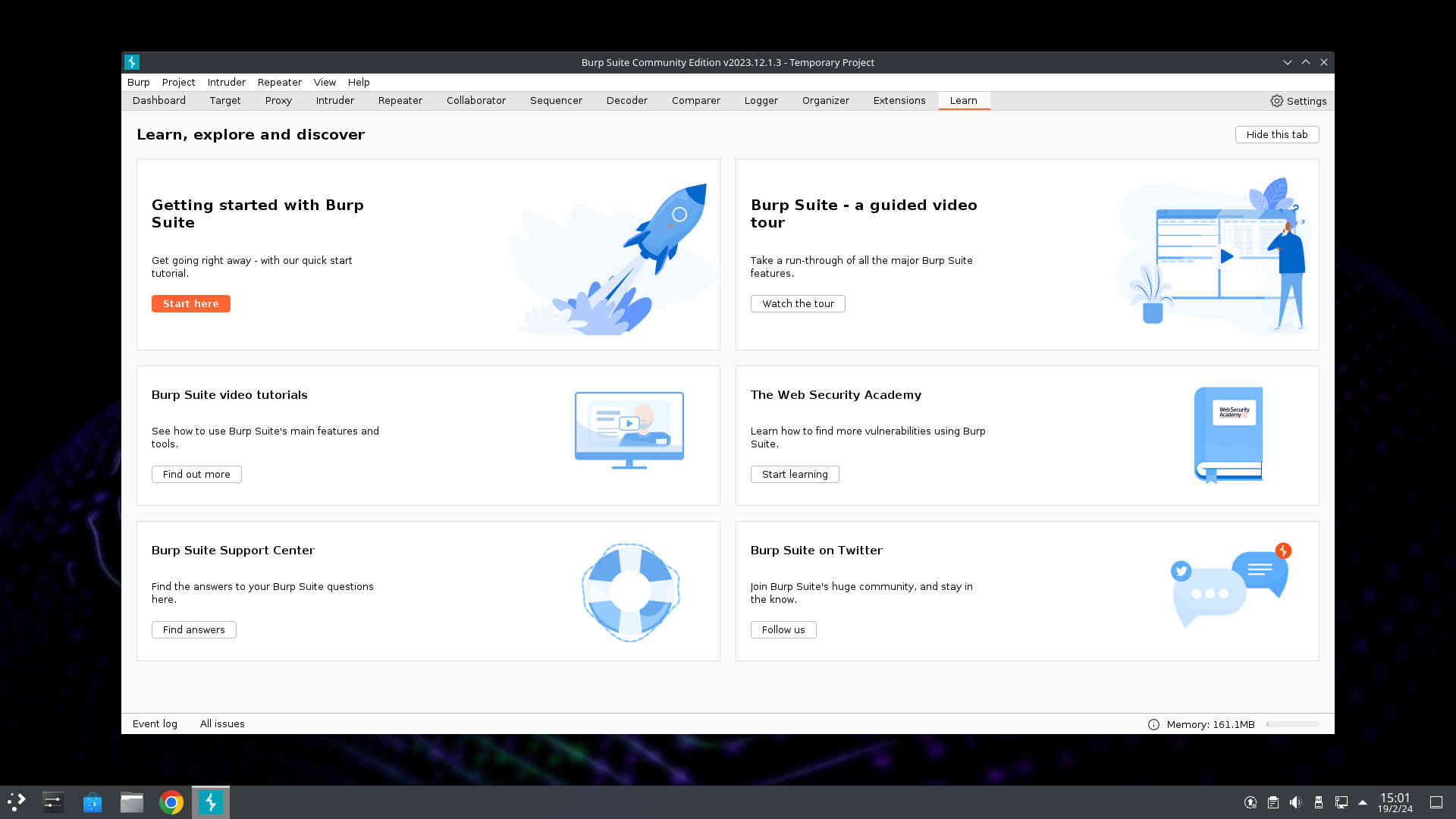

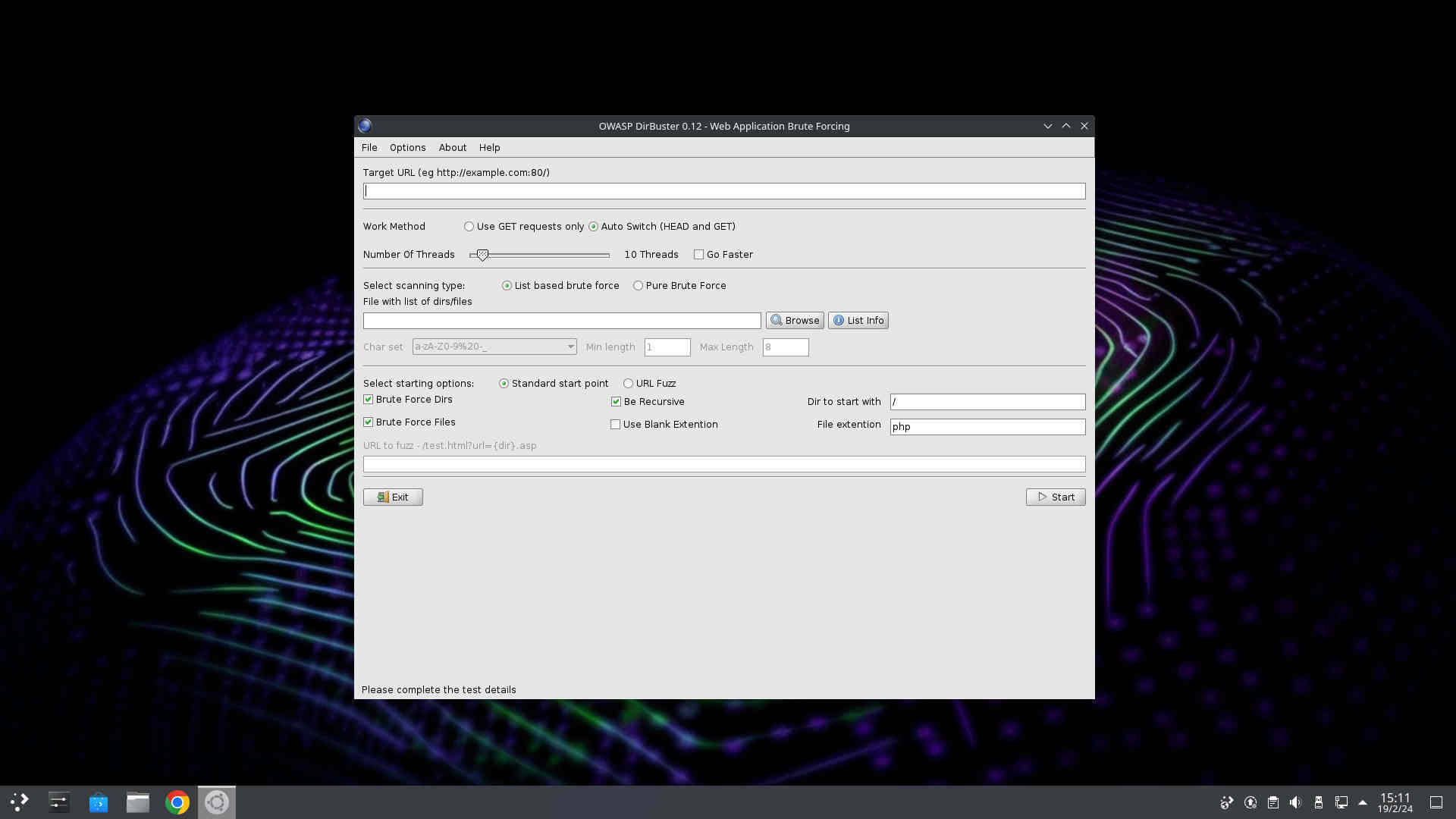

SnoopGod includes more than 800 pre-installed libraries and tools. For the current list of tools, check the repository on GitHub.

Aircrack

Apktool

BeEF XSS

Bettercap

Ettercap

GTK Hash

Guymager

Hydra

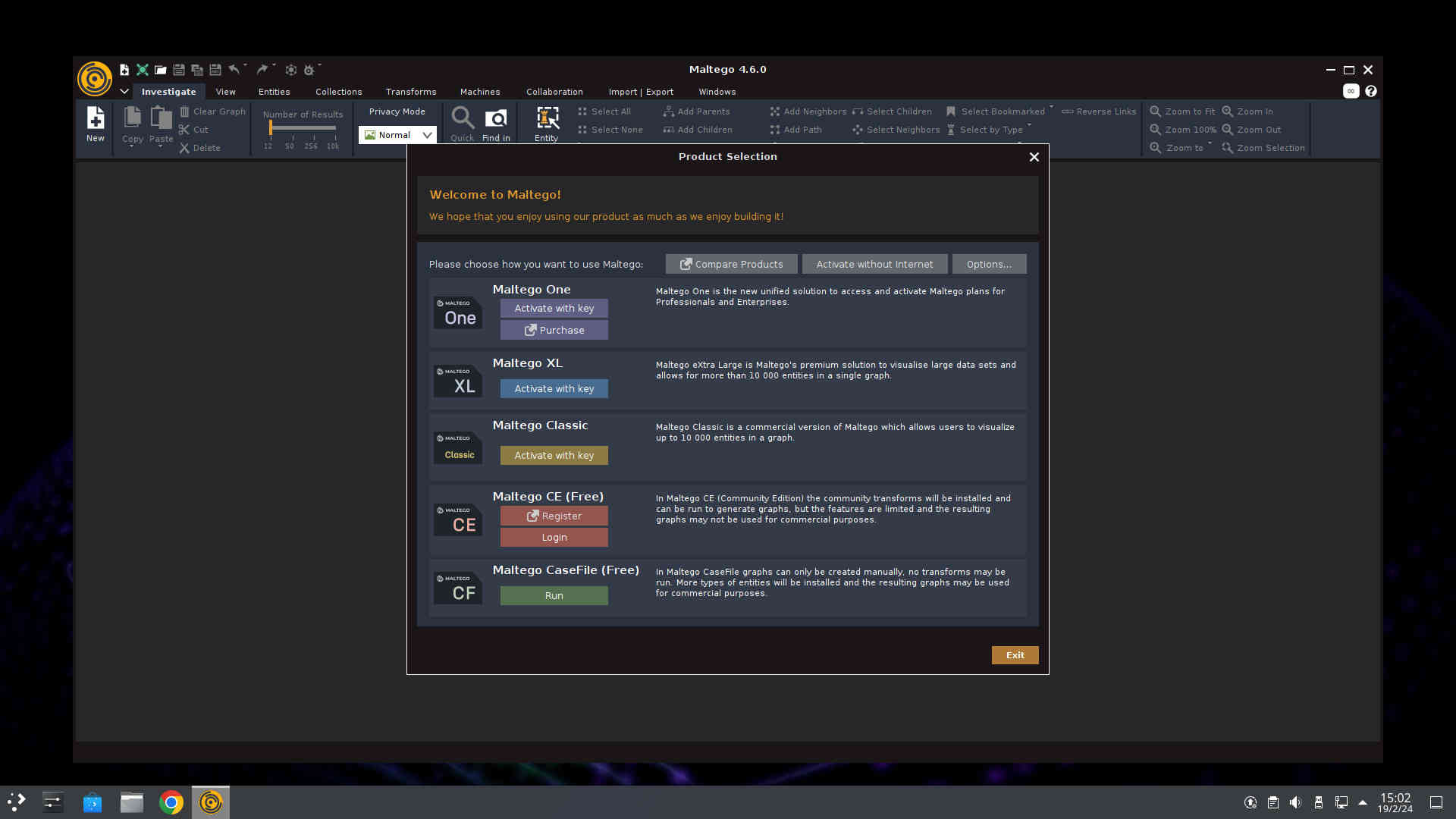

Maltego

Metasploit

Nmap

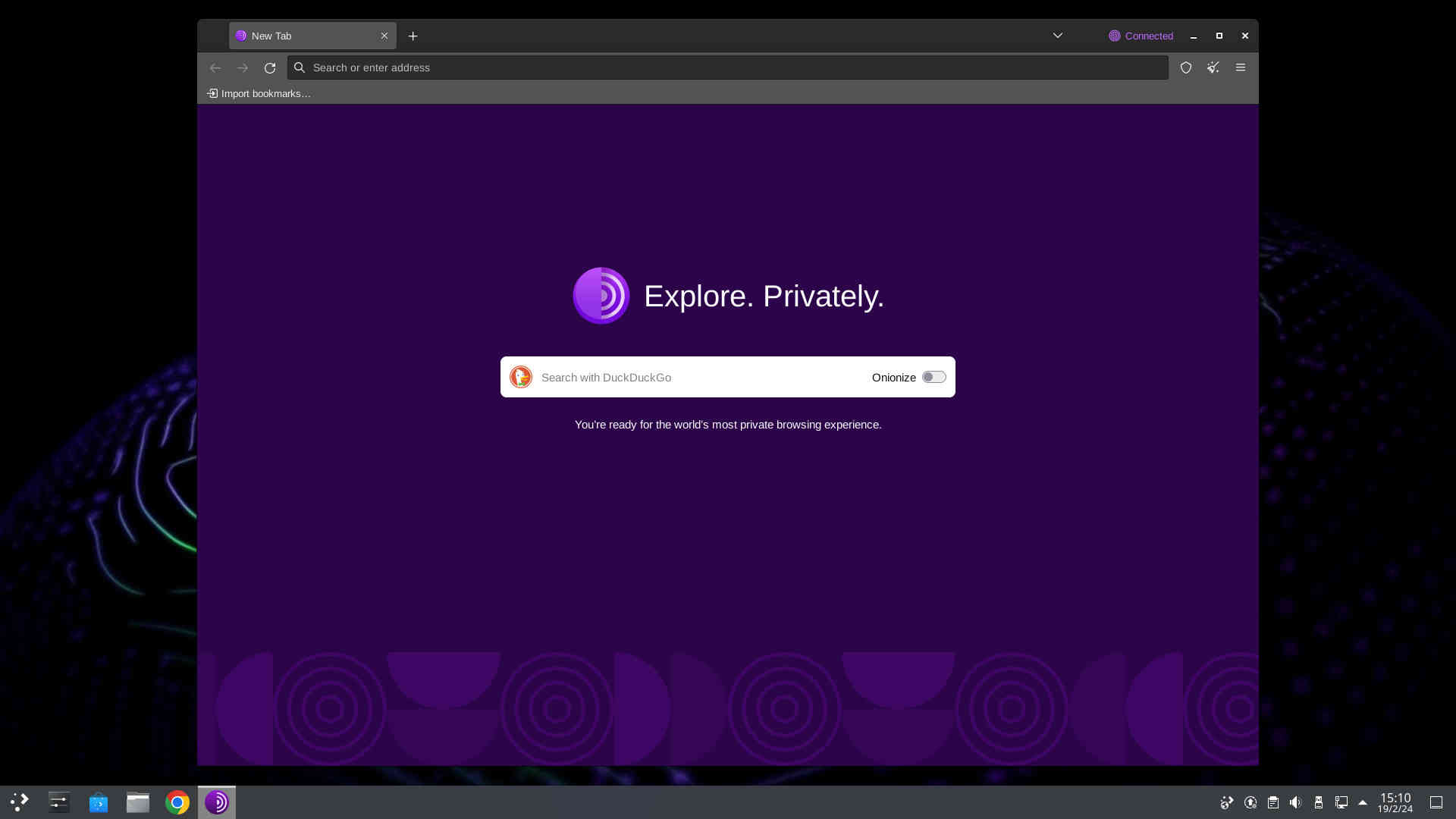

Tor Browser

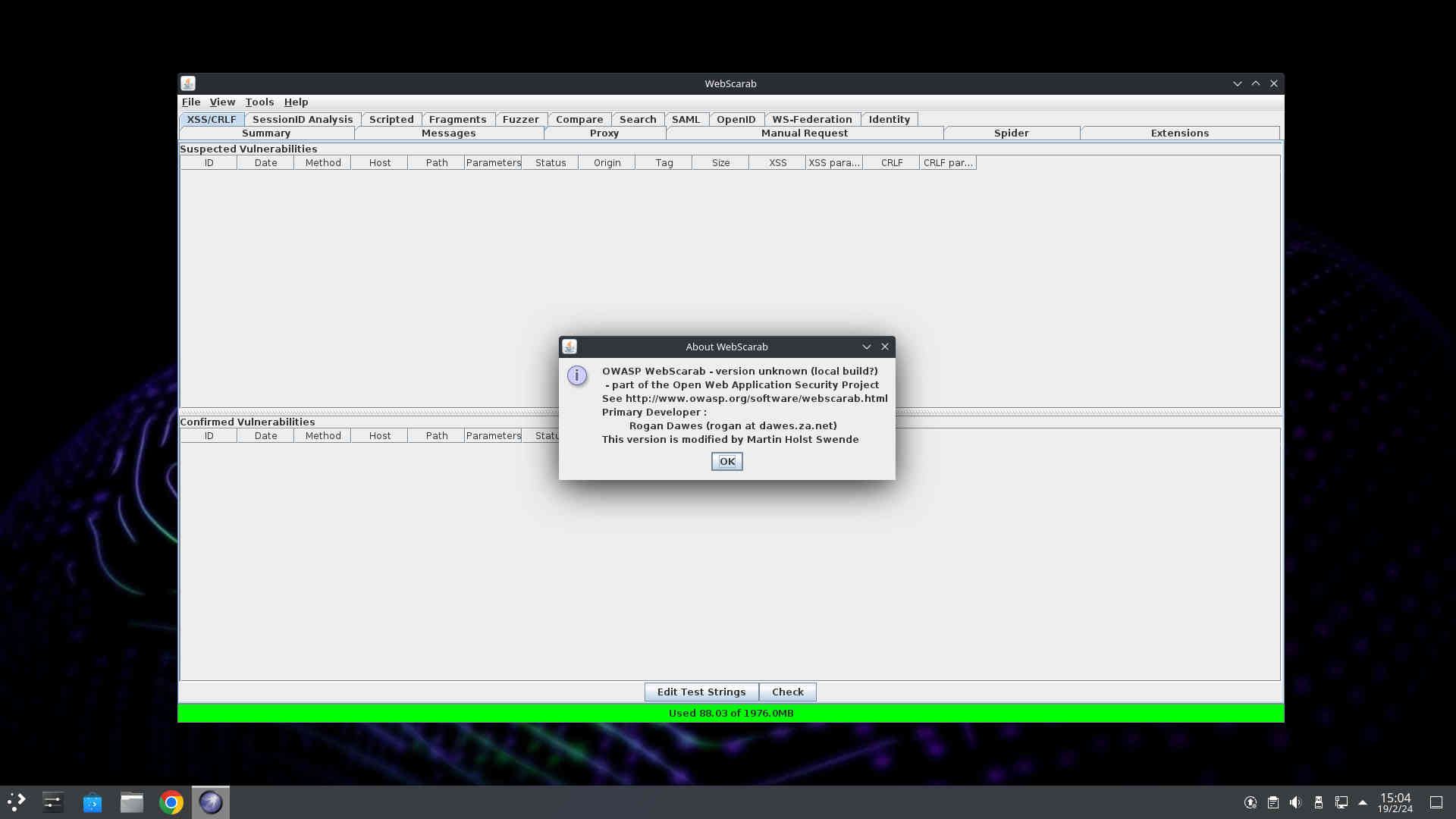

WebScarab

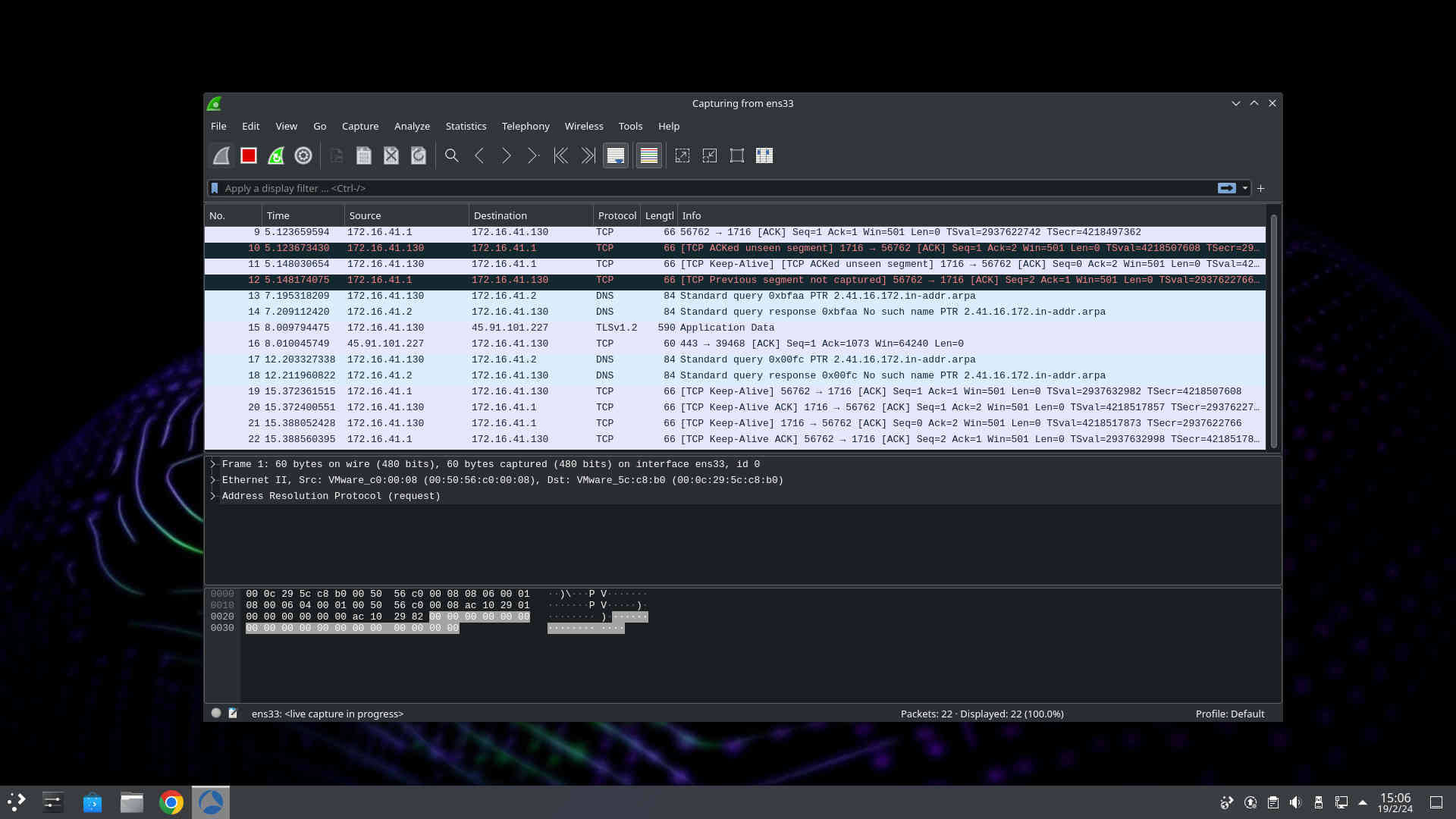

Wireshark

WPScan

Zaproxy

Cooked with love using

The latest technology

SnoopGod is built on the latest technologies in the industry, giving all users access to advanced, cutting-edge systems and the best possible tools for cybersecurity.